TPBench – Theoretical Physics Benchmark for AI

TPBench is a curated dataset and evaluation suite designed to measure the reasoning capabilities of AI models in theoretical physics. Our test problems span multiple difficulty levels, from undergraduate level to frontier research, and cover topics such as cosmology, high-energy theory, and general relativity. By providing a unified framework for problem solving with automatically verifiable answers, TPBench aims to drive progress in AI-based research assistance for theoretical physics.

Read the TPBench paper on arXiv (MLST journal version)

Access the public dataset on Hugging Face

Read our paper on test-time scaling techniques in theoretical physics (NeurIPS 2025 ML4PS workshop)

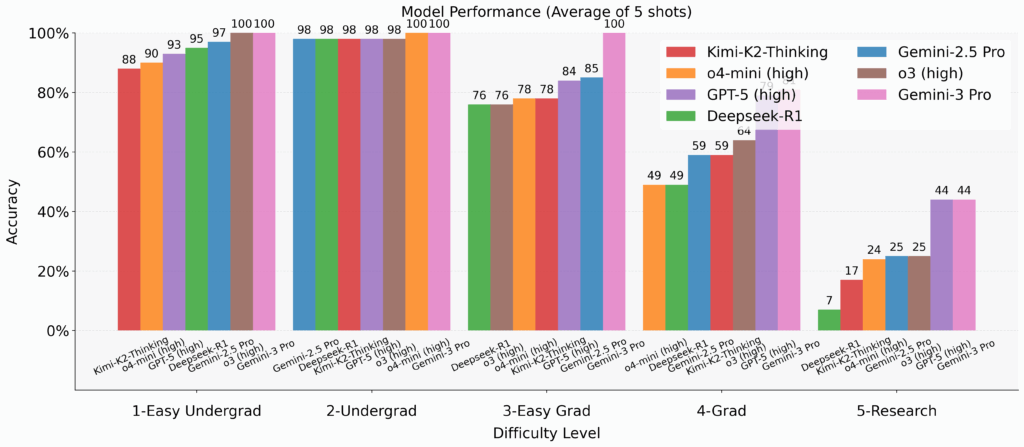

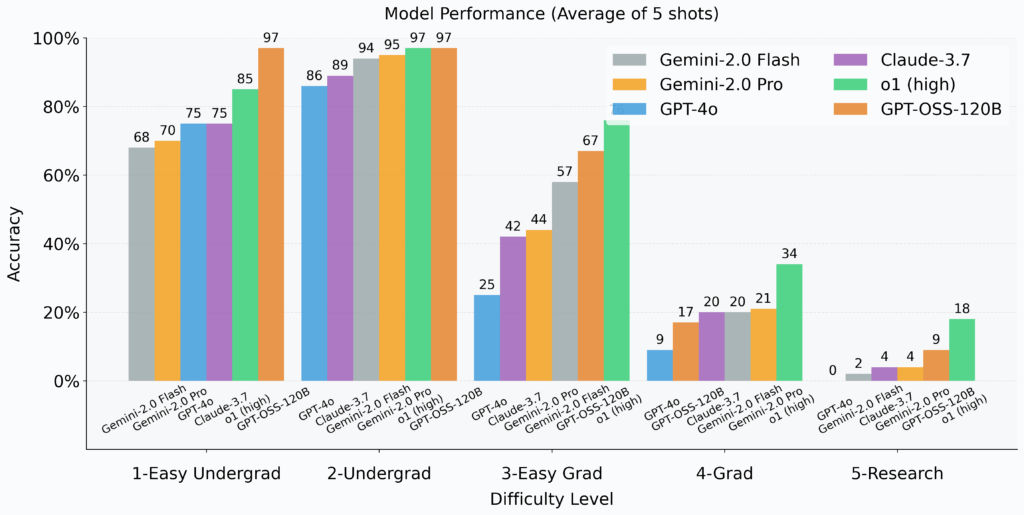

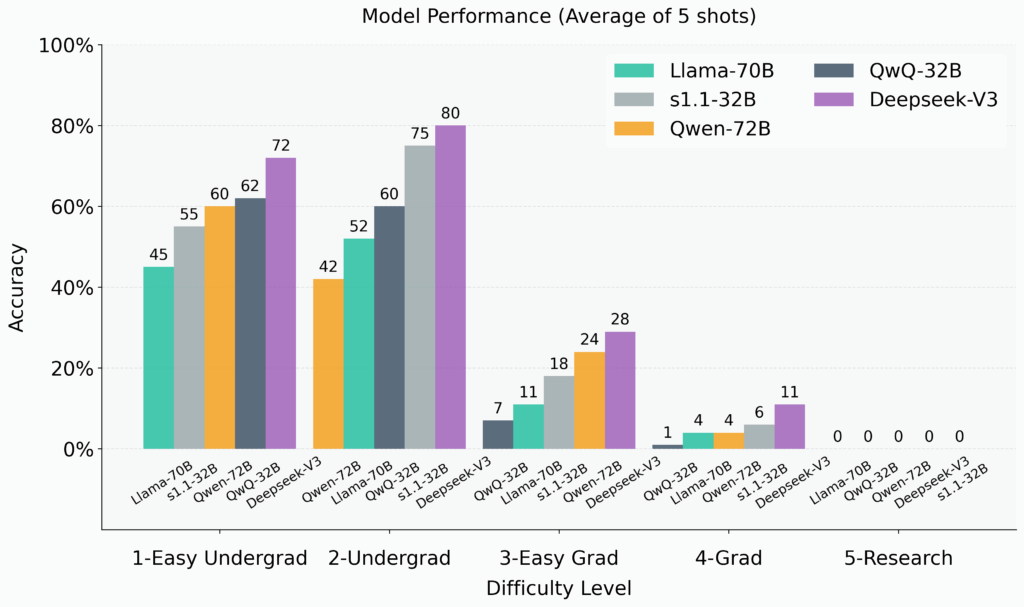

Current Model Performance

Frequently Asked Questions

Where can I see the solutions that models provided for the public problems?

The problem statements and the model solutions are available here: Public Problems and Model Solutions

Are you planning to evaluate more models?

Yes. We will add new state-of-the-art (SOTA) models to the evaluation as long as they offer API access or release their model weights.

How can I contact you?

Please email us at research@tpbench.org if you have questions or concerns.

Interested in contributing to the dataset?

We invite interested researchers to contribute new problems and collaborate on future TPBench updates. Feel free to contact us via research@tpbench.org.

How can I access the full dataset?

We do not make the full dataset publicly available, to prevent it from leaking into the training data of future models. However, if you wish to evaluate your model on our private dataset, please contact us. For collaborative projects, we can also provide access to the full dataset under controlled conditions that mitigate the risk of data leakage.